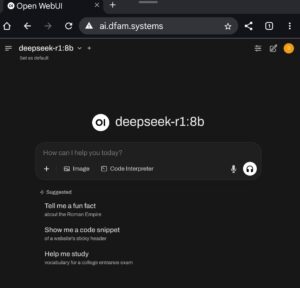

Self-Hosting My Own LLM with Ollama and Stable Diffusion

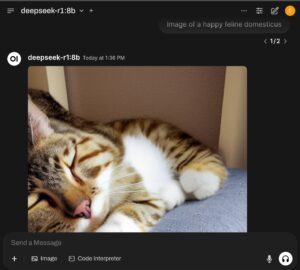

I have been using some form of AI that I developed myself for over a decade, since earning a degree in Artificial Intelligence, Deep Learning and Machine Learning from Stanford and Udacity. Recent advances in production-ready AI have made self-hosting a lot easier. I have set up a rig that runs Ollama on my Dell Precision Tower with dual Xeon CPUs, 256GB of RAM, an NVIDIA Tesla K80 and an NVIDIA Quadro RTX 4000 GPU, running Windows Server 2020. I have paired it with an MSI gaming laptop with an Intel Core i9 CPU, 64GB of RAM and an NVIDIA GeForce RTX 4070 GPU, which hosts Stable Diffusion for image generation and Open WebUI as a GUI dashboard. Everything is currently networked through a Tailscale VPN, so I can access my AI prompt from any device around the world. I am thinking of adding a domain and hosting it on a website platform so that a VPN would not be needed for access.
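For anyone curious what talking to a setup like this looks like from another device on the VPN, here is a minimal sketch in Python that sends a prompt to Ollama's `/api/generate` HTTP endpoint. The host name `precision-tower` and the model name `llama3` are placeholders I made up for illustration, not my actual values; 11434 is Ollama's default port. It also assumes the Ollama server has been configured to listen on more than just localhost, which it does not do out of the box.

```python
import json
import urllib.request

# Hypothetical values: swap in your own Tailscale machine name and model.
SERVER_HOST = "precision-tower"  # placeholder name, not a real host
SERVER_PORT = 11434              # Ollama's default port
MODEL_NAME = "llama3"            # placeholder; use a model you have pulled


def build_generate_request(prompt, model=MODEL_NAME,
                           host=SERVER_HOST, port=SERVER_PORT):
    """Build (but do not send) a POST request for Ollama's /api/generate."""
    url = f"http://{host}:{port}/api/generate"
    # stream=False asks Ollama for one JSON object instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt and return the generated text from the reply."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as reply:
        body = json.load(reply)
    # With stream=False, the generated text is in the "response" field.
    return body["response"]


# Example call (needs a reachable Ollama server):
#   print(ask("Why is the sky blue?"))
```

Building the request separately from sending it makes it easy to check the URL and payload without the server being online.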

ai.dfam.systems